The Documentation Gap: Why AI Coding Assistants Generate Outdated Code

AI coding assistants have revolutionized software development, making coding more accessible and efficient. However, a significant challenge persists that affects both professional developers and "vibe coders" who use AI to build products without deep programming expertise: the generation of outdated, deprecated, or incorrect code.

The Scale of the Problem

Recent empirical research provides clear evidence of this challenge:

According to a comprehensive analysis by Liu et al. (2023), semantic and logical errors appear in 33-57% of general code generated by AI assistants, with this figure rising to over 80% in machine learning tasks1. Many of these errors stem from outdated knowledge of framework APIs and best practices.

Spracklen et al. (2024) documented that AI assistants frequently reference non-existent packages or APIs—termed "hallucinations"—in 5.2% of commercially generated code and up to 21.7% of open-source projects2. This translates directly to wasted development time debugging non-existent methods and classes.

Why This Happens

The root cause is straightforward: AI models are trained on historical codebases with fixed knowledge cutoff dates. As Tambon et al. (2024) note, "the error distribution directly correlates with the distance between training data and current framework versions"3.

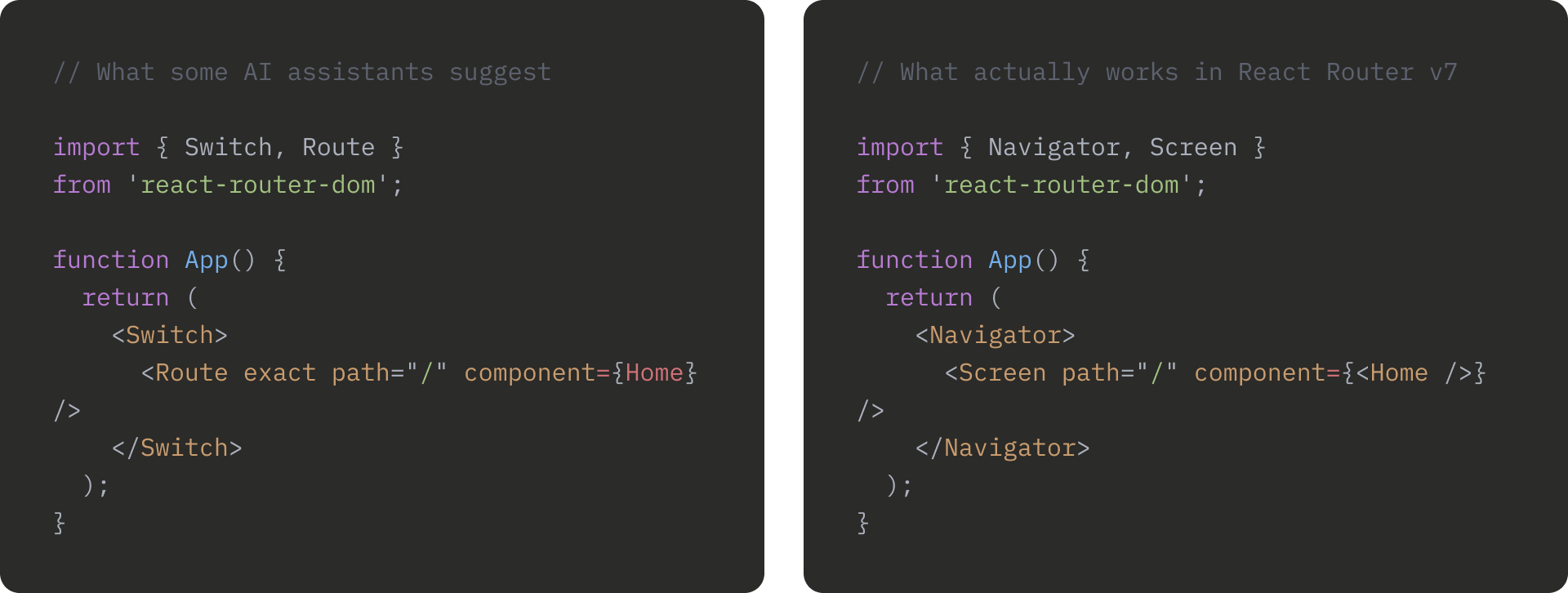

When frameworks release new versions or deprecate APIs after the model's training period, the AI continues suggesting patterns that no longer work:

The Impact on Different Developer Types

Even the most advanced models of 2025 still struggle with code correctness. Research by Dou et al. shows correctness rates of only 63-78% on standard coding tasks across leading models4. This affects different developers in various ways:

- Professional developers lose time debugging non-functional code

- Product builders face delays in shipping features

- "Vibe coders" may be unable to identify why suggested code doesn't work

- Teams experience increased technical debt when deprecated approaches make it into production

Approaches to Solving the Documentation Gap

Several solutions have emerged to address this challenge:

1. Manual Verification

Manually checking AI suggestions against current documentation ensures accuracy but reduces productivity gains.

Pros: Highly reliable, educationalCons: Time-consuming, defeats part of the purpose of using AI

2. Prompt Engineering

Wen et al. (2024) demonstrated that carefully constructed prompts that include version specifications and documentation snippets improved code correctness by 13-24%5.

Pros: No additional tools requiredCons: Requires expertise, token limitations, still not fully reliable

3. External Knowledge Layers

A more recent approach involves MCP (Model Context Protocol) servers that connect AI assistants to current documentation sources.

Pros: Maintains AI productivity benefits while improving accuracyCons: Requires integration with existing tools

4. Automated Validation

Fan et al. demonstrated systems that automatically detect and repair errors in AI-generated code, fixing 42% of errors in their study6.

Pros: Automated, requires minimal user effortCons: Not perfect, might miss context-specific issues

MCP Servers: Bridging the Documentation Gap

MCP servers represent a promising approach to solving the documentation currency problem. These systems sit between developers and AI coding assistants, providing real-time, version-specific documentation.

Companies like Anthropic have developed the Model Context Protocol to standardize how AI assistants access external knowledge sources. This has enabled solutions like Keen that connect AI coding tools to current framework documentation.

These systems work with any MCP-compatible AI assistant (including many popular coding tools) and provide:

- Real-time access to updated documentation

- Version-specific framework information

- Reduced hallucinations and deprecated method suggestions

- Improved code generation without sacrificing productivity

Conclusion

The documentation currency problem in AI-generated code is well-documented in research and experienced by developers daily. While no perfect solution exists yet, understanding the limitations of AI coding assistants is crucial for effective use.

Whether you opt for manual verification, enhanced prompting, or MCP-based solutions like Keen, addressing the knowledge gap between AI training data and current documentation is essential for productive development with AI assistants.

As frameworks continue to evolve rapidly, the ability to connect AI to current documentation will likely become an increasingly important part of the development workflow.

Footnotes

- Liu, J., Xia, C., Wang, Y., & Zhang, L. (2023). "Is Your Code Generated by ChatGPT Really Correct? Rigorous Evaluation of Large Language Models for Code Generation." Neural Information Processing Systems.

- Spracklen, J., Wijewickrama, R., Sakib, N., Maiti, A., Viswanath, B., & Jadliwala, M. (2024). "We Have a Package for You! A Comprehensive Analysis of Package Hallucinations by Code Generating LLMs."

- Tambon, F., Dakhel, A.M., Nikanjam, A., Khomh, F., Desmarais, M.C., & Antoniol, G. (2024). "Bugs in Large Language Models Generated Code: An Empirical Study."

- Dou, S., Jia, H., Wu, S., et al. (2024). "What's Wrong with Your Code Generated by Large Language Models? An Extensive Study."

- Wen, H., Zhu, Y., Liu, C., Ren, X., Du, W., & Yan, M. (2024). "Fixing Code Generation Errors for Large Language Models."

- Fan, Z., Gao, X., Roychoudhury, A., & Tan, S.H. (2022). "Improving Automatically Generated Code from Codex via Automated Program Repair."